1. std::list splice

A splice member function can transfer node(s) from one std::list to another by rearranging only the linked-list's pointers. The elements within the list nodes are not copied or moved. Therefore, the already existing references and iterators to the elements are not invalidated.

Please note that we are carefully choosing the word 'move' in this article because move has a special significance in C++. We use 'move' only in the context of move-semantics and use different words ('transfer' or 'shift') in any other fitting sense.

As an example of splicing, the following code appends all nodes of the listA to the listB without invalidating the references and iterators to the listA's elements:

//Note: C++17 required below. (for CTAD)

std::list listA{1,2,3};

std::list listB{4,5,6};

auto it = listA.begin(); //Iterator to 1

//Append listA to listB

listB.splice(listB.end(), listA);

//All listA elements transferred to listB

std::cout << listB.size() << " "

<< listA.size() << std::endl; //6 0

//Prints Below: 4 5 6 1 2 3

for(auto i : listB)

std::cout << i << " ";

std::cout << std::endl;

//Iterator still valid

std::cout << *it << std::endl; //1

We can transfer the elements without splicing — but that requires erasing the source list elements and inserting them into the destination list. Erasing and inserting the elements is acceptable for small objects (e.g., int), but it can be costly for larger objects because of copy/move and destruction.

There are a few overloads of the std::list splice function for transferring all nodes, a specific node, or a range of nodes. These functions can transfer nodes from one list to another or change nodes' positions within a list. In all the cases except one, the time complexity of a splice is constant O(1). The only case where the complexity is linear is when a range of (not all) nodes are transferred from one list to another. There is a bit of history related to the splice's complexity being an argument against having the O(1) complexity of size(). Check out this article by Howard Hinnant for the background.

A notable property of splicing, which is important for the rest of this article, is that we can transfer a node from one position to another even within a list. For instance:

std::list<std::string> strList{"A", "B", "C"};

//Transfers "C" to the front (before "A")

strList.splice(strList.begin(), strList, --strList.end());

//Prints Below: C A B

for(auto& str : strList)

std::cout << str << " ";

We will design and implement a practical application of splice in the next section, where we need to transfer an element from any position to the front of a list efficiently.

2. LRU Cache

A Least Recently Used (LRU) cache is a limited capacity cache that discards the least recently used item to make room for a new item when it is full.

We want to create a generic key-value LRU cache that has an interface to add a key-value pair, retrieve a value for a given key, and erase a key-value item. The operations to add, retrieve, and erase should have constant O(1) average time complexity.

2.1. Design

A standard hash-map (e.g., std::unordered_map) can serve as a key-value cache for adding and retrieving the items with average O(1) complexity. We can also enforce a size limit on this cache. However, an LRU cache has another implied order in which the items are arranged according to least (most) recent usage.

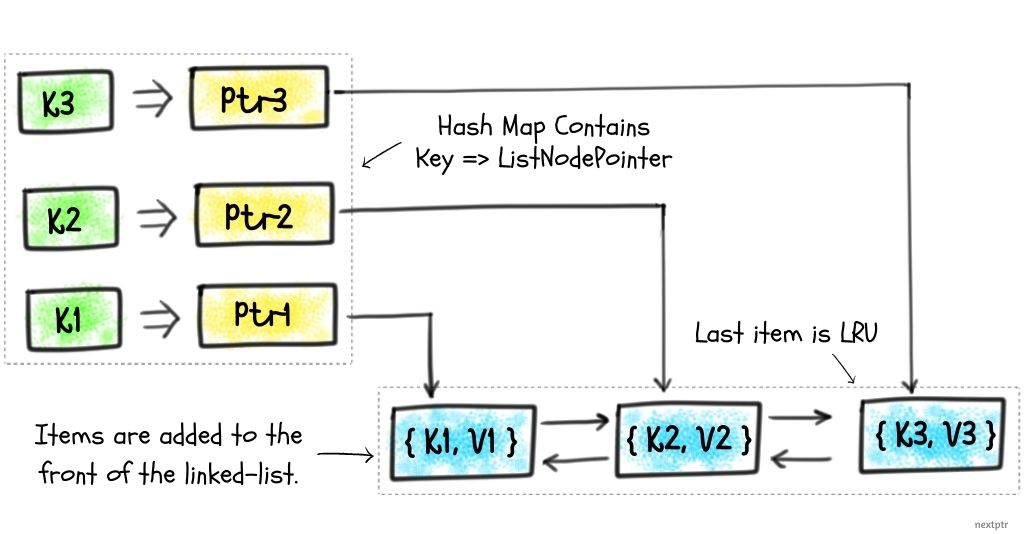

Therefore, we will use two data structures for the implementation here: a linked-list and a hash-map.

The linked-list stores the key-value pairs in the recency of usage order, and the hash-map contains the pointers to the linked-list nodes against the keys. Therefore, the hash-map acts as an index for faster (O(1)) access to the linked-list's items.

The most-recently-used item is the first node of the linked-list, and the least-recently-used item is the last node. We add a new item to the front of the list and remove the last (LRU) item if the cache is full. When an item is accessed, it is transferred to the front of the list. Shifting a linked-list node is an O(1) complexity operation when we have the node pointer.

It should be clear by now why we have chosen linked-list instead of array-list. It is because we need to shift an element to the front of the list when it is accessed. Changing a node's position within a linked-list involves adjusting internal pointers and does not invalidate the external pointers — remember that we are storing list node pointers in the hash-map.

Here is the schematic of our design:

2.2. Implementation

We are using std::list and the std::unordered_map for the linked-list and the hash-map, respectively. Here is the interface of the LRUCache:

//Note: C++17 required.

//K - Key, V - Value, Capacity - Max Size

template<typename K, typename V, size_t Capacity>

class LRUCache {

public:

//Assert that Max size is > 0

static_assert(Capacity > 0);

/*Adds a key=>value item

Returns false if key already exists*/

bool put(const K& k, const V& v);

/*Gets the value for a key.

Returns empty std::optional if not found.

The returned item becomes most-recently-used*/

std::optional<V> get(const K& k);

//Erases an item

void erase(const K& k);

//Utility function.

//Calls callback for each {key,value}

template<typename C>

void forEach(const C& callback) const {

for(auto& [k,v] : items) {

callback(k, v);

}

}

private:

/*std::list stores items (pair<K,V>) in

most-recently-used to least-recently-used order.*/

std::list<std::pair<K,V>> items;

//unordered_map acts as an index to the items store above.

std::unordered_map<K, typename std::list<std::pair<K,V>>::iterator> index;

};

The put method adds a key-value pair. For simplicity, it does nothing and returns false if a key already exists. If the cache is full, it removes the last (LRU) item in the list. And finally, the new item is always added to the front of the list. The map (index) is kept updated accordingly:

template<typename K, typename V, size_t Capacity>

bool

LRUCache<K,V,Capacity>::put(const K& k, const V& v) {

//Return false if the key already exists

if(index.count(k)) {

return false;

}

//Check if cache is full

if(items.size() == Capacity) {

//Delete the LRU item

index.erase(items.back().first); //Erase the last item key from the map

items.pop_back(); //Evict last item from the list

}

//Insert the new item at front of the list

items.emplace_front(k, v);

//Insert {key->item_iterator} in the map

index.emplace(k, items.begin());

return true;

}

The get method returns a value for a given key. It returns a std::optional, which contains a value or is empty depending on if the item is found or not. Before the found value is returned, the accessed item is transferred to the front of the list by splicing. This splicing operation has constant complexity and involves no copy or move:

template<typename K, typename V, size_t Capacity>

std::optional<V>

LRUCache<K,V,Capacity>::get(const K& k) {

auto itr = index.find(k);

if(itr == index.end()) {

return {}; //empty std::optional

}

/*Use list splice to transfer this item to

the first position, which makes the item

most-recently-used. Iterators still stay valid.*/

items.splice(items.begin(), items, itr->second);

//Return the value in a std::optional

return itr->second->second;

}

The erase method is the simplest and quick. It erases the found item from the list and the map:

template<typename K, typename V, size_t Capacity>

void

LRUCache<K,V,Capacity>::erase(const K& k) {

auto itr = index.find(k);

if(itr == index.end()) {

return;

}

//Erase from the list

items.erase(itr->second);

//Erase from the map

index.erase(itr);

}

2.3. Test

We can test the LRUCache as follows:

//Prints all items of an LRUCache in a line

//Items are printed in MRU -> LRU order

template<typename C>

void printlnCache(const C& cache) {

cache.forEach([](auto& k, auto& v){

std::cout << k << "=>" << v << " ";

});

std::cout << std::endl;

}

int main() {

//City -> Population in millions (Max size 3)

LRUCache<std::string, double, 3> cache;

//Add 3 entries

cache.put("London", 8.4);

cache.put("Toronto", 2.5);

cache.put("Sydney", 5.2);

//Prints Below: Sydney=>5.2 Toronto=>2.5 London=>8.4

printlnCache(cache);

//Make "London=>8.4" the most recently accessed

std::cout << "London=>"

<< cache.get("London").value_or(-1)

<< std::endl; //London=>8.4

//Prints Below: London=>8.4 Sydney=>5.2 Toronto=>2.5

printlnCache(cache);

//This would remove the LRU item (Toronto=>2.5)

cache.put("Tokyo", 9.4);

//Prints Below: Tokyo=>9.4 London=>8.4 Sydney=>5.2

printlnCache(cache);

return 0;

}

3. Conclusion

In this article, we explored the list splicing and a real-life example of it. std::list splicing feature has been there from start, but its ability to transfer a node within a list could go unnoticed.

List splicing is also conceptually related to the map (set) node extraction technique introduced in C++17, which is the foundation of splicing in associative containers. Read the nextptr article, "Update keys of map or set with node extract" to learn about that.